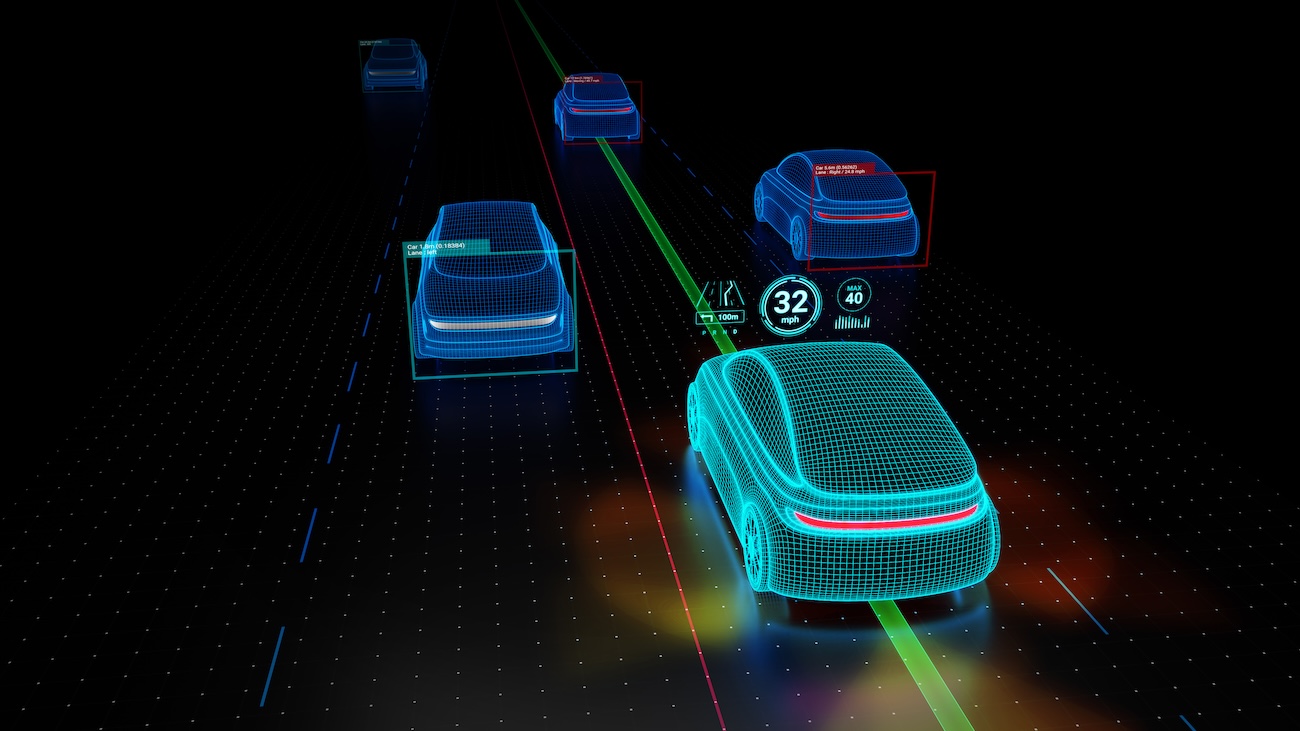

Autonomous cars are one of the most prominent AI visions of the future. Self-driving vehicles promise to reduce accidents, increase efficiency, ease traffic jams, save time, and make the daily commute more pleasant. However, the vision of a fully autonomous car has not yet materialized.

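As of 2024, the highest level of autonomy available in privately owned cars is Level 3 on the SAE J3016 scale, which runs from Level 0 (no automation) to Level 5 (full automation). Mercedes-Benz’s Drive Pilot leads the way in limited markets. This system operates only under specific conditions, including daylight and clear weather, on pre-approved highways in California and Nevada. Driverless robotaxi services such as Waymo operate at Level 4, but only within limited, mapped service areas.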

Most vehicles marketed as self-driving, including those using Tesla’s Full Self-Driving (Supervised) system, remain at Level 2 and require constant driver supervision.

One significant roadblock to fully autonomous cars is the ethical decisions they must make on the road. Once deployed, such cars will make millions of decisions: which obstacles to avoid, how to protect human lives, how to navigate safely, and, in the worst case, whom to harm when no harmless option exists. To explore these moral choices, researchers at the MIT Media Lab created the Moral Machine, an online platform that presents people with such dilemmas and collects their judgments about what a self-driving car should do. Cultural differences complicate the picture further, because they shape perceptions of right and wrong in life-or-death scenarios. For instance, societies may weigh values such as protecting the young over the elderly differently, which adds another layer of complexity to programming ethical frameworks into autonomous vehicles.

To study ethical decision-making for autonomous cars, we can draw inspiration from classical ethical theories:

| Ethical Theory | Key Principles | Application to Autonomous Vehicles | Founding Philosophers |
|---|---|---|---|
| Utilitarianism | Focuses on maximizing overall happiness or minimizing harm; actions are judged by their consequences. | The vehicle is programmed to minimize total casualties and may prioritize saving the greatest number of people, even at the cost of sacrificing a passenger. | Jeremy Bentham (1748–1832) introduced the principle of utility; John Stuart Mill (1806–1873) emphasized societal welfare and the quality of happiness. |
| Deontology (Rule-Based) | Actions are guided by rules or duties, regardless of outcomes; certain rights and principles are inviolable. | The vehicle follows strict rules, such as never intentionally harming an individual, and may avoid decisions based purely on outcomes. | Immanuel Kant (1724–1804) grounded morality in duty and universal moral laws through the Categorical Imperative. |
| Social Contract Theory | Ethical decisions arise from agreements made for mutual benefit; societal norms and shared responsibilities take priority. | The vehicle aligns with public expectations and laws, balancing individual safety and the common good based on a “contract” of trust. | Thomas Hobbes (1588–1679) argued for social contracts as an escape from chaos; John Locke (1632–1704) emphasized natural rights and mutual agreement; Jean-Jacques Rousseau (1712–1778) highlighted the “general will” as the basis for the collective good. |
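
To make the differences in the table concrete, here is a deliberately simplified sketch of how each framework might turn into a decision rule. Everything in it is hypothetical and for discussion only: the `Option` type, its fields (`expected_casualties`, `harms_by_redirecting`, `publicly_accepted`), the three rule functions, and the scenario numbers are invented for illustration and do not describe any real vehicle's software or the Moral Machine itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One possible maneuver in a hypothetical, highly simplified dilemma."""
    name: str
    expected_casualties: int    # toy number of people expected to be harmed
    harms_by_redirecting: bool  # True if the car actively steers harm onto someone
    publicly_accepted: bool     # hypothetical stand-in for public expectations and laws


def utilitarian_choice(options: list[Option]) -> Option:
    # Consequences only: pick the option with the fewest expected casualties.
    return min(options, key=lambda o: o.expected_casualties)


def deontological_choice(options: list[Option]) -> Option:
    # Rule first: never actively redirect harm onto someone, whatever the totals.
    permitted = [o for o in options if not o.harms_by_redirecting]
    # If every option breaks the rule, this toy model falls back to all options.
    candidates = permitted or options
    # Outcomes are not compared; the first permitted option (the default
    # maneuver, listed first by convention here) is chosen.
    return candidates[0]


def social_contract_choice(options: list[Option]) -> Option:
    # Publicly agreed norms constrain the choice first...
    accepted = [o for o in options if o.publicly_accepted] or options
    # ...and within those norms the car minimizes harm, as the public might expect.
    return min(accepted, key=lambda o: o.expected_casualties)


# Hypothetical scenario: brakes are insufficient to stop before pedestrians ahead.
options = [
    Option("brake in lane", 3, harms_by_redirecting=False, publicly_accepted=True),
    Option("swerve onto sidewalk", 1, harms_by_redirecting=True, publicly_accepted=False),
    Option("swerve into barrier", 2, harms_by_redirecting=False, publicly_accepted=True),
]

for rule in (utilitarian_choice, deontological_choice, social_contract_choice):
    print(f"{rule.__name__}: {rule(options).name}")
```

Run as written, the three rules pick three different maneuvers: the utilitarian rule swerves onto the sidewalk, the deontological rule brakes in its lane, and the social contract rule swerves into the barrier. Note that deciding how to fill in each flag (for example, whether hitting the barrier "redirects" harm onto the passengers) is itself an ethical judgment that the code cannot make for you.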

- Which ethical framework is the most suitable for autonomous vehicles: utilitarianism, deontology (rule-based), or social contract theory?

- Should a globally agreed-upon ethical standard exist for AI decision-making, or should these principles vary by cultural and regional norms?

- Try the Moral Machine and describe your conclusions after comparing your choices with those of your team.

Resources

D’Amato, A., Dancel, S., Pilutti, J., Tellis, L., & Frascaroli, E. (2022). Exceptional driving principles for autonomous vehicles. Journal of Law and Mobility, 2022. Retrieved from https://repository.law.umich.edu/jlm/vol2022/iss1/2/